SciVerse

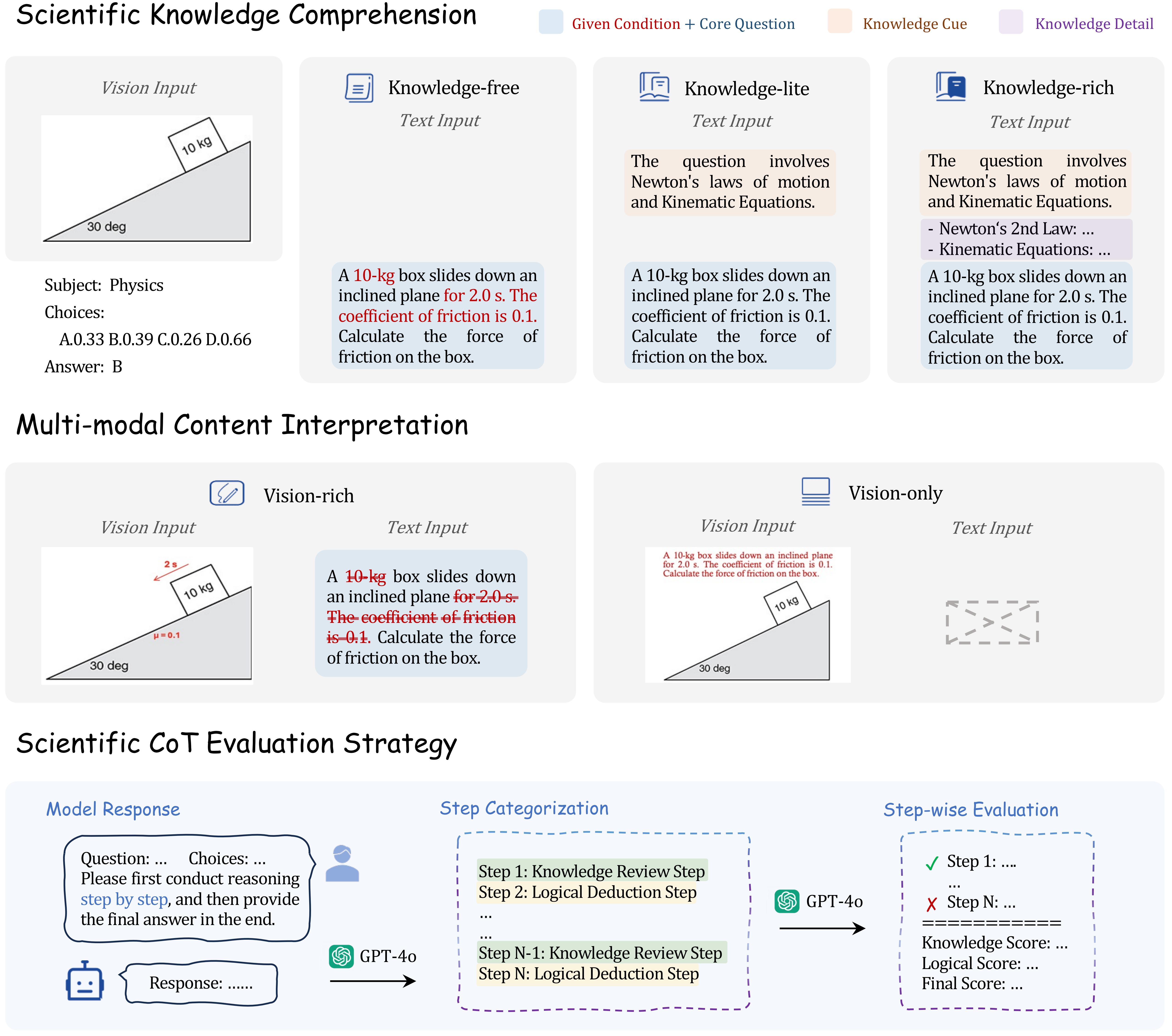

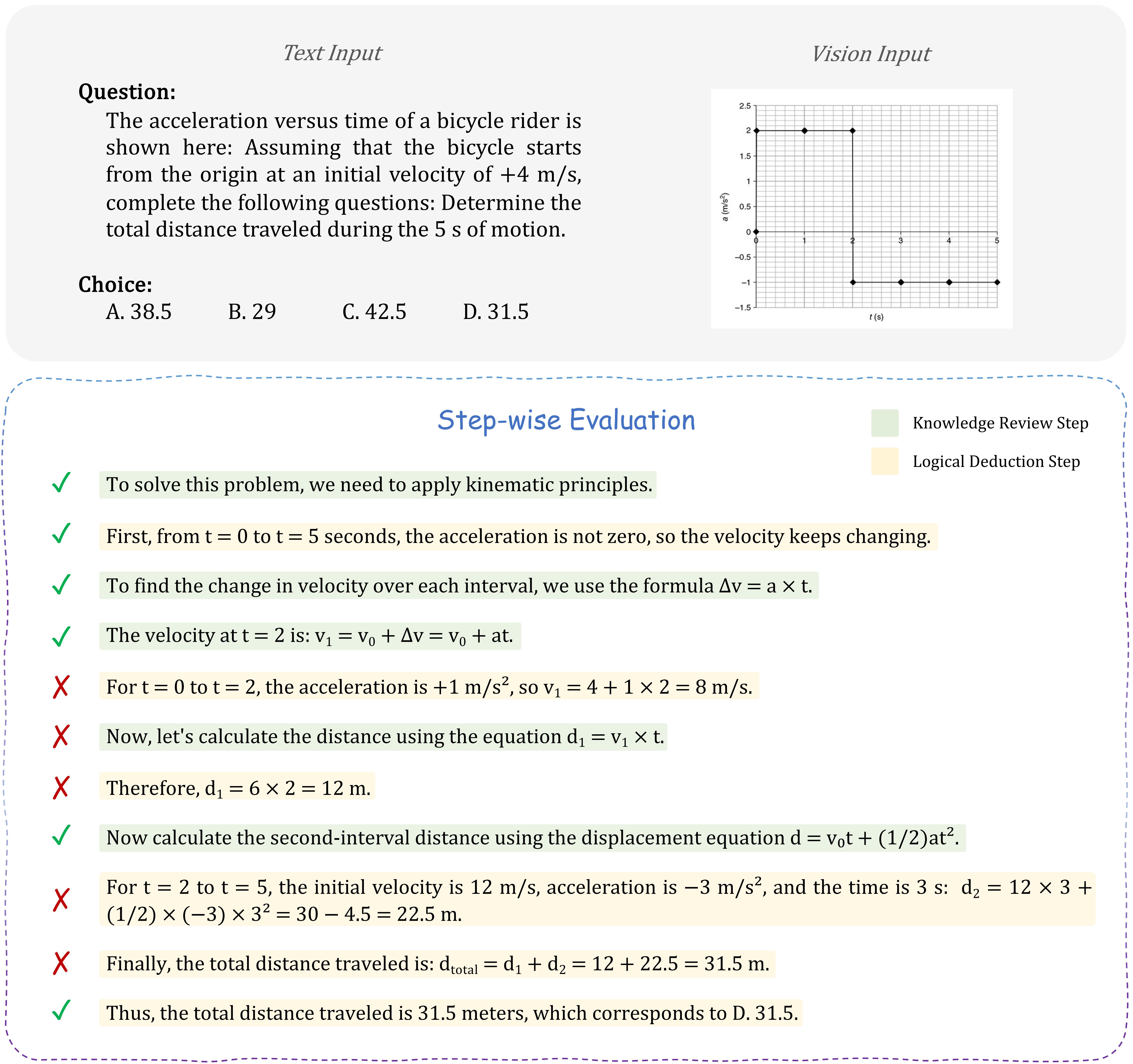

The rapid advancement of Large Multi-modal Models (LMMs) has enabled their application to scientific problem-solving, yet their fine-grained capabilities remain under-explored. In this paper, we introduce SciVerse, a multi-modal scientific evaluation benchmark that thoroughly assesses LMMs across 5,735 test instances in five distinct versions. We aim to investigate three key dimensions of LMMs: scientific knowledge comprehension, multi-modal content interpretation, and Chain-of-Thought (CoT) reasoning. To reveal whether LMMs possess sufficient scientific expertise, we first transform each problem into three versions that provide different levels of the knowledge required to solve it, i.e., Knowledge-free, -lite, and -rich. Then, to explore how LMMs interpret multi-modal scientific content, we annotate two more versions, i.e., Vision-rich and -only, which move progressively more of the question information from the text into the diagram. By comparing results across versions, SciVerse systematically examines the professional knowledge stock and visual perception skills of LMMs in scientific domains. In addition, to rigorously assess CoT reasoning, we propose a new scientific CoT evaluation strategy that performs a step-wise assessment of knowledge and logical errors in model outputs. Our extensive evaluation of different LMMs on SciVerse reveals critical limitations in their scientific proficiency and provides new insights for future development.
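To make the idea of a step-wise CoT assessment concrete, here is a minimal sketch, assuming each reasoning step of a model output has already been judged (by a human or an automatic grader) for a knowledge error and a logical error. The names `StepJudgment` and `stepwise_scores`, and the three rates it reports, are hypothetical illustrations, not the SciVerse scoring formula.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class StepJudgment:
    """Hypothetical verdict for one CoT step (not the official SciVerse format)."""
    knowledge_error: bool  # the step misstates or misapplies a scientific fact or formula
    logical_error: bool    # the step does not follow from the preceding steps


def stepwise_scores(steps: List[StepJudgment]) -> Dict[str, float]:
    """Aggregate per-step verdicts into simple rates (hypothetical metric)."""
    if not steps:
        raise ValueError("need at least one reasoning step")
    n = len(steps)
    # Fraction of steps free of knowledge errors.
    knowledge_ok = sum(not s.knowledge_error for s in steps) / n
    # Fraction of steps free of logical errors.
    logic_ok = sum(not s.logical_error for s in steps) / n
    # Fraction of steps free of both error types.
    fully_ok = sum(not (s.knowledge_error or s.logical_error) for s in steps) / n
    return {"knowledge": knowledge_ok, "logic": logic_ok, "step_accuracy": fully_ok}


steps = [
    StepJudgment(knowledge_error=False, logical_error=False),
    StepJudgment(knowledge_error=True, logical_error=False),
    StepJudgment(knowledge_error=False, logical_error=True),
    StepJudgment(knowledge_error=False, logical_error=False),
]
print(stepwise_scores(steps))  # {'knowledge': 0.75, 'logic': 0.75, 'step_accuracy': 0.5}
```

Keeping the two error types separate, rather than issuing a single pass/fail per answer, is what lets a step-wise evaluation distinguish missing scientific knowledge from faulty reasoning.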

Overview of Five Problem Versions and our Scientific CoT Evaluation Strategy in SciVerse.

SciVerse Dataset

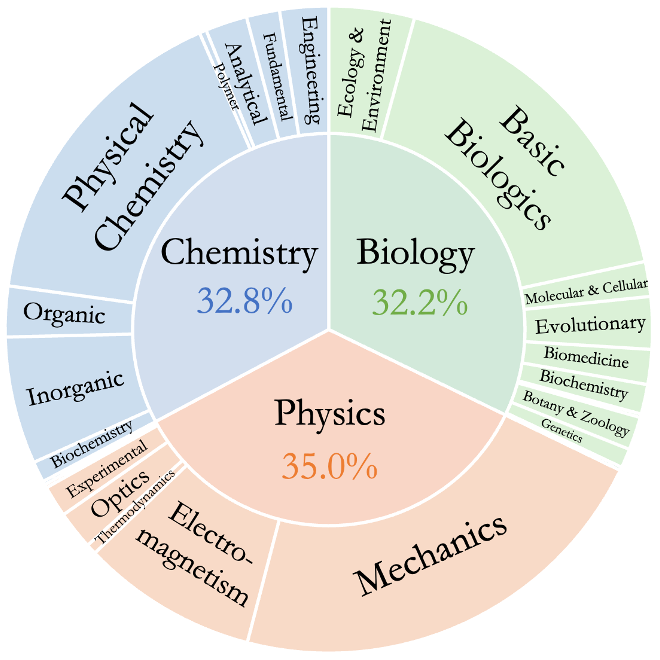

SciVerse consists of 5,735 problems spanning three major domains: Physics, Chemistry, and Biology. These subjects are further broken down into 21 distinct scientific topics, enabling a fine-grained evaluation of problem-solving performance.

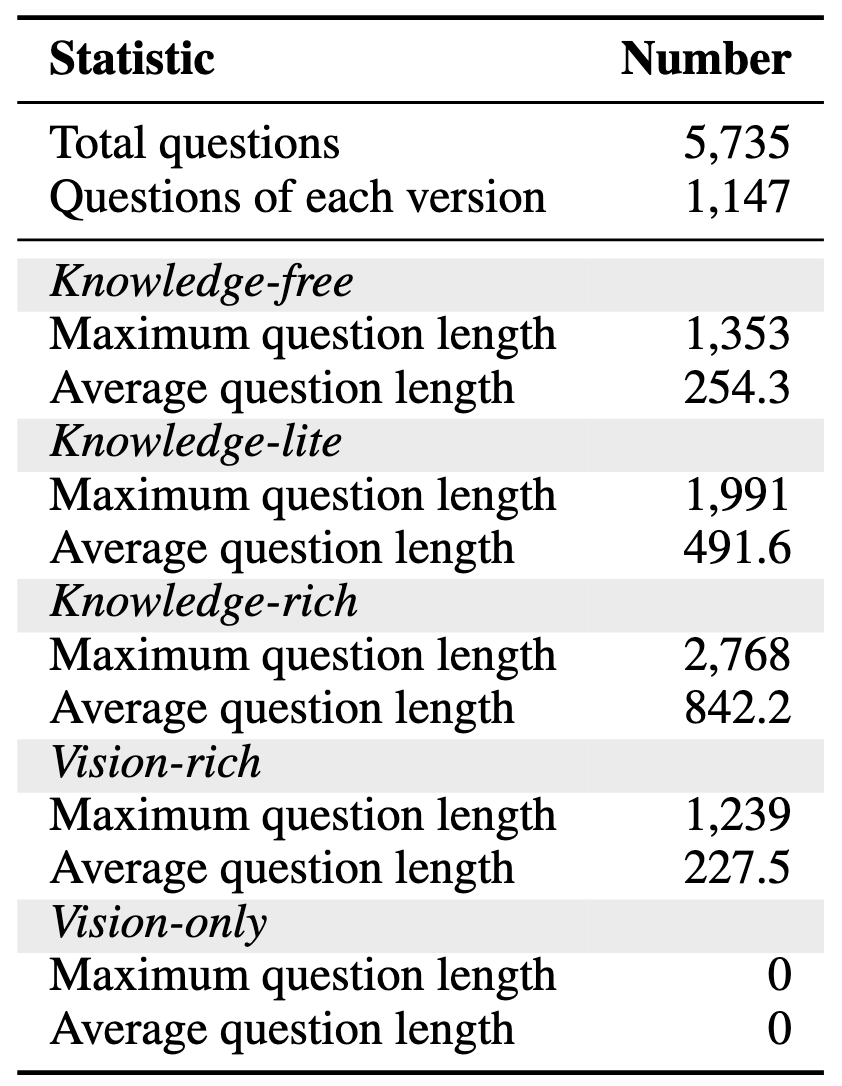

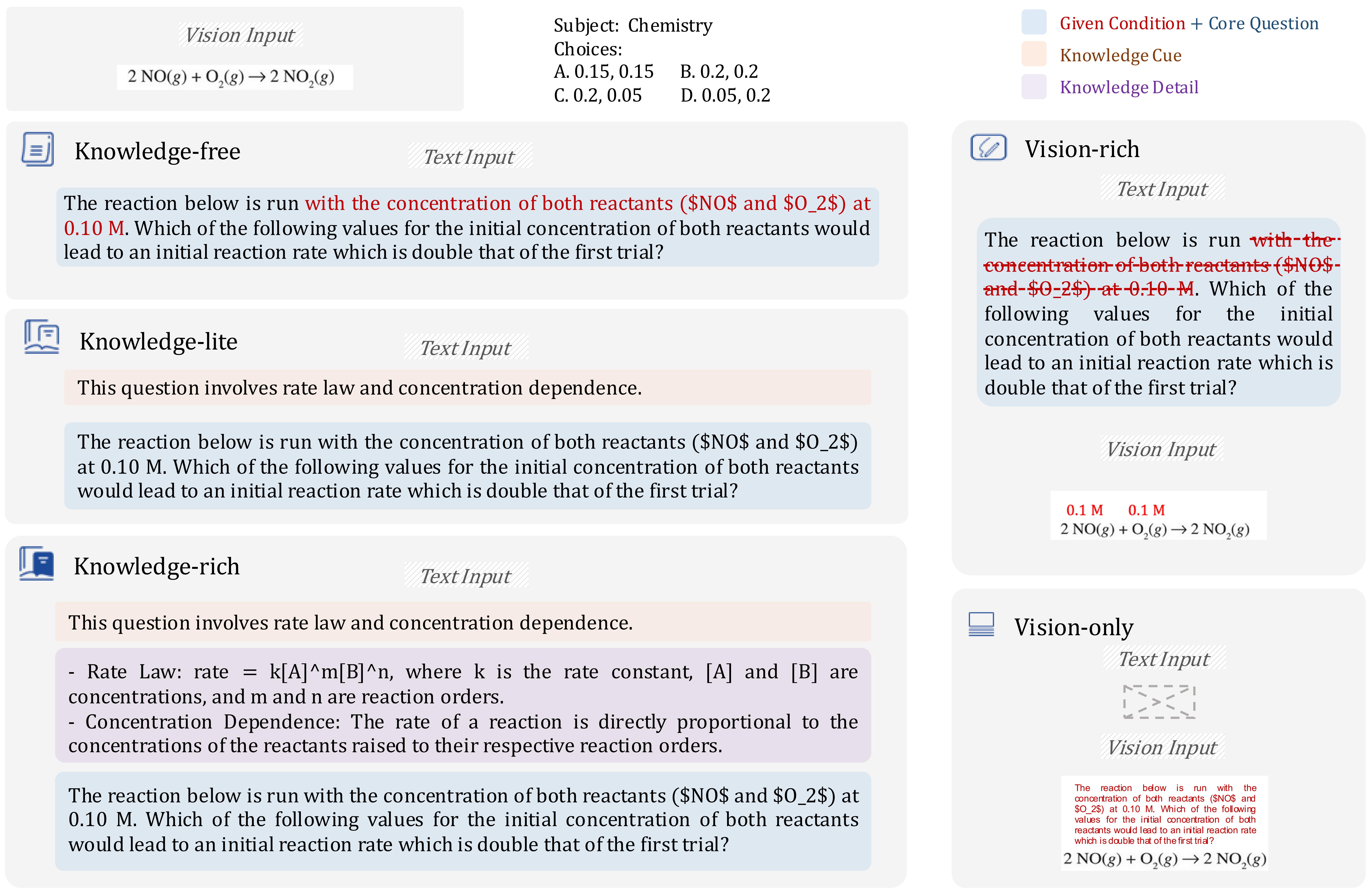

SciVerse includes five different problem versions, each consisting of 1,147 instances (5 × 1,147 = 5,735), designed to assess both the knowledge expertise and the visual perception capabilities of LMMs. As more knowledge content is integrated, question length increases from Knowledge-free to Knowledge-lite to Knowledge-rich. As information is gradually shifted from the text to the diagrams, question length decreases from Knowledge-lite to Vision-rich to Vision-only.
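The version structure described above can be captured in a small data model. This is a minimal sketch, assuming a simple enum of the five version names; `Version`, `INSTANCES_PER_VERSION`, the two axis lists, and `follows_length_order` are hypothetical helpers rather than part of any released SciVerse code, and the length check expects you to supply your own per-version mean question lengths.

```python
from enum import Enum
from typing import Dict, List


class Version(Enum):
    """The five SciVerse problem versions (names from the text; enum is illustrative)."""
    KNOWLEDGE_FREE = "Knowledge-free"
    KNOWLEDGE_LITE = "Knowledge-lite"
    KNOWLEDGE_RICH = "Knowledge-rich"
    VISION_RICH = "Vision-rich"
    VISION_ONLY = "Vision-only"


INSTANCES_PER_VERSION = 1147

# Length orderings stated in the text, listed from shortest to longest question.
KNOWLEDGE_AXIS: List[Version] = [Version.KNOWLEDGE_FREE, Version.KNOWLEDGE_LITE, Version.KNOWLEDGE_RICH]
VISION_AXIS: List[Version] = [Version.VISION_ONLY, Version.VISION_RICH, Version.KNOWLEDGE_LITE]


def total_instances() -> int:
    """Every version contains the same problems, so the total is a simple product."""
    return INSTANCES_PER_VERSION * len(Version)


def follows_length_order(axis: List[Version], mean_len: Dict[Version, float]) -> bool:
    """Return True if mean question length strictly increases along the given axis."""
    lengths = [mean_len[v] for v in axis]
    return all(a < b for a, b in zip(lengths, lengths[1:]))


print(total_instances())  # 5735
```

Modeling Knowledge-lite as the shared midpoint of both axes mirrors the text: it is the base from which knowledge is added (Knowledge-rich) and from which textual information is moved into the diagram (Vision-rich, Vision-only).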

Key statistics of SciVerse.

Subject distribution of SciVerse.

Examples of Five Problem Versions in SciVerse.

Examples of the Scientific CoT Evaluation Strategy.

Comparison of different problem versions in SciVerse.